How to Unlock the Power of AI Translation Models

Language barriers have always posed a significant challenge to effective communication, whether in business, advertising, marketing or other forms of digital media. However, recent advancements in Artificial Intelligence (AI) have paved the way for remarkable breakthroughs in translation technology.

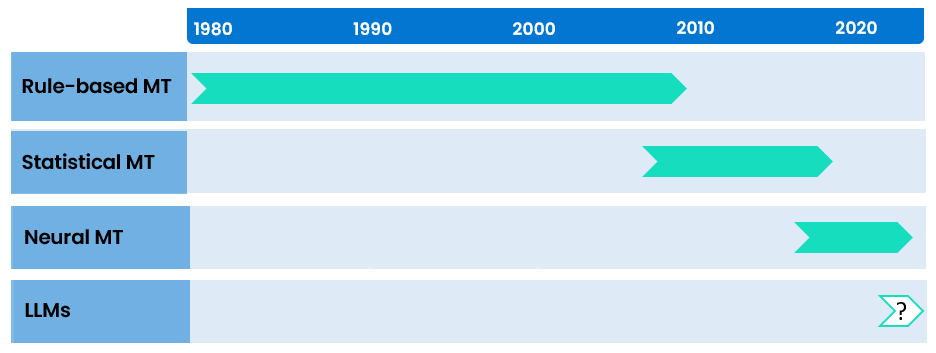

Rule-based Machine Translation (MT) engines became commercially available in the early 1990s, when their use spread across the language industry. Statistical MT then took over and remained the dominant technology for more than 10 years, until around 2017. Since then, Neural Machine Translation (NMT) has become the industry-standard AI engine type and is now in its sixth year of dominance, although a paradigm shift may not be far away.

Caption: Dominant automated translation technologies have changed over time.

The models that have held the entire online community’s attention since early 2023 are Large Language Models (LLMs) such as ChatGPT, which are generic language models trained to perform many tasks. At their current speed of evolution, and provided they are tuned for the purpose, LLMs could soon replace NMT as the dominant technology for AI translation.

Below, Nicola Pegoraro, Content Delivery Director at Locaria, shares some of his insights from more than 15 years’ experience of working with language-based AI models. Concentrating on their strengths and weaknesses, the aim is to guide companies on how they can utilise AI to streamline processes and bridge linguistic divides.

How Do These Models Work?

1) Rule-based MT: Rule-based MT relies on predefined linguistic rules and dictionaries to translate text from one language to another. These rules are crafted by linguists and language experts, enabling precise translations. However, the rigidity of the rule-based approach often limits its ability to handle complex linguistic nuances and adapt to evolving languages.

2) Statistical MT: Statistical MT takes a data-driven approach, analysing vast amounts of bilingual texts to identify patterns and probabilities. By leveraging statistical models, this approach provides more flexibility and better handling of context. However, it still struggles with idiomatic expressions and understanding subtle linguistic nuances.

3) Neural MT: Neural MT has emerged as a game-changer in translation technology. By utilising neural networks, this model learns from vast amounts of training data to generate translations. Neural MT excels in capturing context, idiomatic expressions, and language nuances. It can adapt to language variations and improve its performance over time. However, it requires significant computational resources and extensive training data to achieve optimal results.

4) Large Language Model (LLM) Translation: The latest addition to the AI translation landscape is translation based on Large Language Models (LLMs). These models, like OpenAI’s GPT-3.5, are trained on a massive corpus of text and can generate translations based on context and language understanding. LLMs offer remarkable flexibility, as they can handle multiple language pairs and adapt to various domains. They excel in producing natural-sounding language, but their accuracy is still a question mark.
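To make the contrast between the first two approaches above more concrete, here is a minimal Python sketch. It is a toy illustration, not how any commercial engine is built: the tiny English-to-Spanish lexicon, the single reordering rule, the word-aligned "parallel corpus" and all function names are assumptions invented for this example. Real statistical engines learn word and phrase alignments from sentence pairs rather than receiving them ready-made.

```python
from collections import Counter, defaultdict

# --- Rule-based MT (toy): a hand-written dictionary plus one grammar rule ---
# Hypothetical lexicon and word classes, written by hand as a linguist would.
LEXICON = {"the": "el", "red": "rojo", "car": "coche"}
ADJECTIVES = {"red"}
NOUNS = {"car"}


def rule_based_translate(sentence: str) -> str:
    words = sentence.lower().split()
    # Rule: English "adjective noun" becomes "noun adjective" in Spanish.
    i = 0
    while i < len(words) - 1:
        if words[i] in ADJECTIVES and words[i + 1] in NOUNS:
            words[i], words[i + 1] = words[i + 1], words[i]
            i += 2
        else:
            i += 1
    # Words missing from the lexicon pass through untranslated,
    # which illustrates the rigidity of a purely rule-based system.
    return " ".join(LEXICON.get(word, word) for word in words)


# --- Statistical MT (toy): probabilities estimated from bilingual data ---
# Hypothetical, already word-aligned pairs (a strong simplification).
PARALLEL_CORPUS = [
    ("bank", "banco"),
    ("bank", "banco"),
    ("bank", "orilla"),
    ("house", "casa"),
]


def train_translation_table(pairs):
    """Estimate P(target | source) as relative frequencies."""
    counts = defaultdict(Counter)
    for source, target in pairs:
        counts[source][target] += 1
    table = {}
    for source, targets in counts.items():
        total = sum(targets.values())
        table[source] = {t: c / total for t, c in targets.items()}
    return table


def statistical_translate(word: str, table) -> str:
    options = table.get(word)
    if not options:
        return word  # unseen word: no statistics to draw on
    # Pick the translation observed most often in the training data.
    return max(options, key=options.get)


if __name__ == "__main__":
    print(rule_based_translate("the red car"))
    table = train_translation_table(PARALLEL_CORPUS)
    print(statistical_translate("bank", table))
```

The rule-based function only ever knows what was written into it by hand, while the statistical function changes its answer as the training pairs change. Neither looks at the surrounding sentence, which is the kind of context that neural models and LLMs are better placed to capture.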

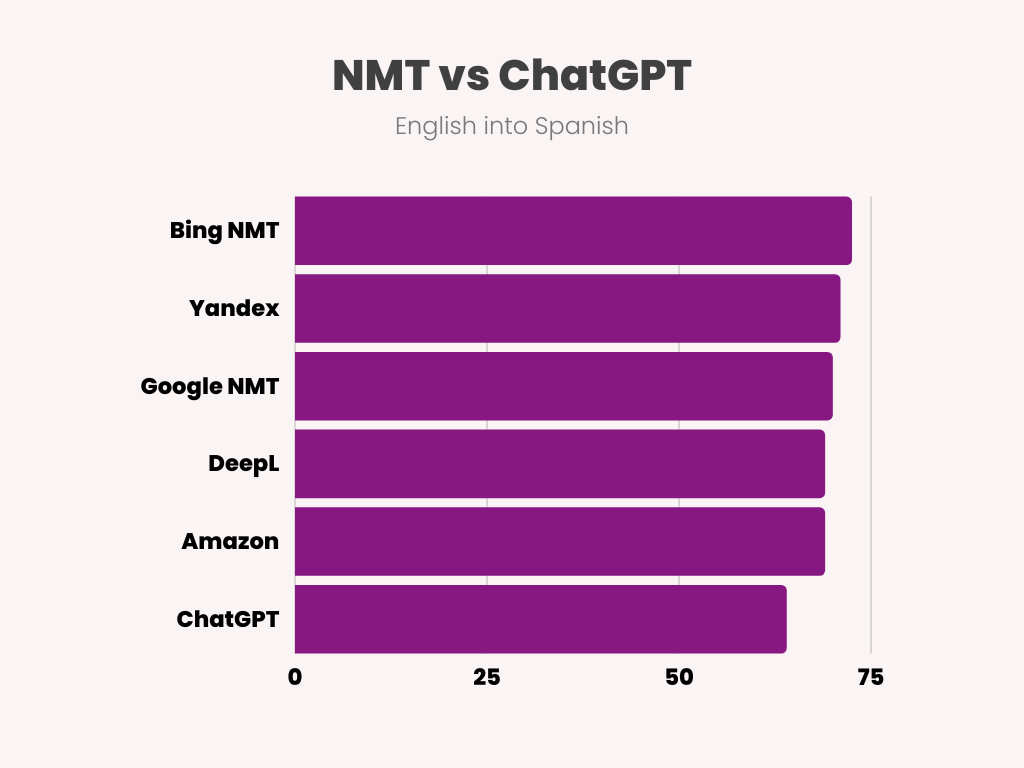

Caption: Neural Machine Translation (NMT) is currently the methodology offering the best results. However, LLMs (for example, ChatGPT) have the potential to disrupt the status quo if trained for translation, and with a more multilingual corpus.

As impressive as these AI translation models are, it is crucial to understand that they are tools designed to assist human users rather than replace them. While AI models have made significant strides in language translation, they are not flawless and may occasionally produce errors or miss the mark in capturing context-specific meanings. Therefore, the role of human translators remains essential in quality assurance, editing, and fine-tuning the output generated by these models.

However, by understanding how these AI engines work, we can appreciate the different approaches they employ to tackle the complexities of language translation. Each model has its strengths and weaknesses, and their combination with human expertise holds the key to unlocking the true potential of AI-powered language translation.

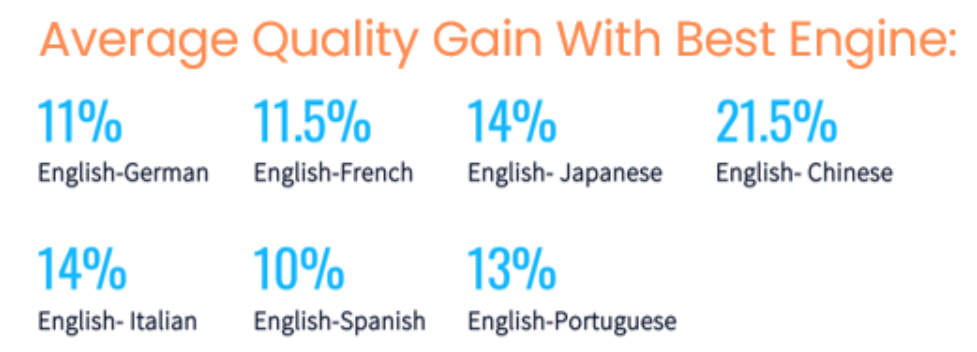

Caption: Comparison of automated translation quality using an MTQE (Machine Translation Quality Estimation) methodology via Phrase.

The Future of AI Translation

Our analysis is that the NMT engine market is essentially fragmented: results differ over time and vary by language combination and industry. The available LLMs currently offer a low degree of translation accuracy, which is a major blocker within the language industry. For example, ChatGPT has been trained on content that is 93% English, which is not yet adequate for multilingual content needs. However, the solutions of the future are likely to combine specialised LLMs with adaptive NMT in hybrid models that tackle long-standing issues such as formality levels and tone of voice.

The rise of AI translation models has transformed the way we communicate across languages. From rule-based and statistical models to neural MT and language model-based translation, each approach brings unique strengths and limitations. As language technology continues to evolve, the combination of AI models and human expertise offers the most promising path forward. By embracing these powerful tools and leveraging human ingenuity, we can break down language barriers and foster global communication like never before.

Contact the Locaria team to find out more about how your company can integrate AI translation tools into its content production model.

Writer: ChatGPT

Insights: Nicola Pegoraro

Post-edit: Daniel Purnell